OpenAI has drawn global attention with a series of announcements and signals about its future direction. From expanding massive infrastructure projects to preparing for advanced biological capabilities, the company shows clear ambitions to shape the next era of artificial intelligence. These plans highlight not only the scale of its vision but also the risks and debates surrounding its technology.

Investors, policymakers, and experts are closely watching each move. OpenAI’s roadmap reflects both the promise of transformative breakthroughs and the dangers that may follow if such powerful systems are misused. Understanding these developments provides a window into how AI could impact industries, security, and society in the years ahead.

Expanding Infrastructure Through Stargate and NVIDIA Partnership

OpenAI has placed infrastructure at the center of its long-term strategy. The scale of its upcoming projects suggests a push to secure computational resources for the next wave of AI models. This approach goes beyond incremental updates and signals a foundational investment in the very backbone of artificial intelligence.

The company has already confirmed the next steps for its Stargate initiative, a massive expansion of AI data centers in collaboration with Oracle and SoftBank. Once completed, the project is expected to reach nearly 10 gigawatts of capacity, making it one of the largest AI infrastructure build-outs ever undertaken.

Stargate Expansion and Global Scale

The Stargate project involves building five new data center sites across the United States. According to OpenAI, these facilities will bring the total capacity to nearly 7 gigawatts, inching closer to its original 10-gigawatt target. This infrastructure is designed to handle the immense computational demands of advanced AI models, ensuring that future versions can run at scale without performance bottlenecks.

Financially, the project is one of the most ambitious in the AI sector. Reports from the Financial Times highlight the billions of dollars required for this expansion, with Oracle providing cloud expertise and SoftBank contributing capital. The sheer size of the investment demonstrates how infrastructure has become a strategic battleground in AI.

Experts note that such large-scale initiatives are essential to maintaining global competitiveness. As demand for AI services grows rapidly, companies without secured computational power will struggle to innovate at the frontier.

NVIDIA Partnership and Chip Strategy

Alongside infrastructure, OpenAI has entered a strategic partnership with NVIDIA. The agreement covers the deployment of advanced NVIDIA systems in new AI data centers, with NVIDIA intending to invest up to $100 billion in OpenAI in phases as those systems are deployed.

The first wave of NVIDIA-powered systems is expected to go live in the second half of 2026. These systems will enable OpenAI to run larger, more complex models across multiple modalities including text, images, and speech. NVIDIA, in turn, benefits by cementing its role as the backbone of AI hardware.

Industry analysts describe the partnership as a win-win scenario. OpenAI secures a reliable supply of cutting-edge chips, while NVIDIA locks in one of the most prominent AI companies as a long-term partner. This synergy is likely to accelerate the race to develop more powerful and efficient AI systems.

Preparing for Biological AI Capabilities

Beyond infrastructure, OpenAI has acknowledged that its future models could achieve high-level capabilities in biology. This revelation has triggered discussions about the potential benefits and risks of AI in life sciences, an area that holds promise for medicine but also dangers for misuse.

The company has integrated these concerns into its Preparedness Framework, a risk management system designed to monitor “frontier capabilities” in domains such as biology and chemistry. The framework identifies when a model crosses into “High capability” territory and demands stronger oversight.

What High Capability in Biology Means

High capability in biology refers to an AI model’s ability to provide significant support in biological research. This could include analyzing molecular structures, predicting chemical reactions, or even assisting in experimental design. While these abilities could revolutionize medicine and drug discovery, they also carry dual-use risks: the same knowledge that aids research can be turned to harm.

The concern is that such AI tools could be exploited by non-experts to conduct dangerous biological experiments. In the wrong hands, information meant for health breakthroughs could facilitate the development of biological weapons. This is why OpenAI stresses the need for early mitigation.

Experts in biosecurity warn that once AI models reach a certain threshold, controlling their misuse becomes extremely difficult. Therefore, transparency, oversight, and international collaboration are crucial in preventing unintended consequences.

Mitigation Efforts and Safety Systems

To address these risks, OpenAI is implementing a series of safeguards. The company has partnered with biologists, government agencies, and national labs to evaluate risks in real-world contexts. It has also trained its models to refuse dangerous biological requests, while allowing legitimate scientific exploration.

Another key element is the development of detection and enforcement systems. OpenAI monitors usage patterns to identify high-risk requests and applies restrictions when necessary. Red-teaming exercises, involving external security experts, are also conducted to test vulnerabilities.

Moreover, OpenAI has outlined a blueprint for an “early warning system” to detect attempts at biological misuse before they escalate. This system would serve as a guardrail as AI models grow more capable in the life sciences.

Roadmap for Future Models and Product Simplification

While infrastructure and bio-capabilities are critical, OpenAI is also reshaping its product line. The company aims to unify its models, making AI more seamless for users. This shift reflects an evolution in how AI is delivered, packaged, and monetized.

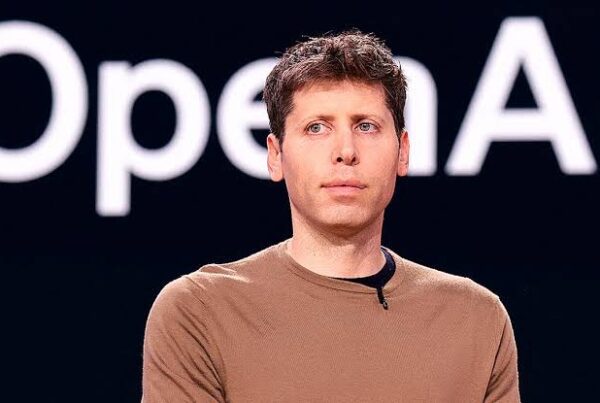

Sam Altman, CEO of OpenAI, has shared insights about the roadmap. He confirmed that GPT-4.5, internally codenamed Orion, will be the last model without chain-of-thought reasoning. Future versions will merge reasoning from the “o-series” into general-purpose models, eliminating the need for users to choose between specialized systems.

GPT-4.5, GPT-5, and Integration Plans

GPT-4.5 represents a transitional step. It offers improvements over GPT-4 but does not fully integrate advanced reasoning features. Altman emphasized that the real leap will occur with GPT-5, which is expected to unify different capabilities into a single model.

This means users will no longer need to select between “chat,” “reasoning,” or “multimodal” models. Instead, the system will automatically leverage the right internal mechanisms to deliver the best response. This simplification aligns with OpenAI’s goal of making AI more intuitive and less fragmented.

Industry observers believe this could improve user adoption, as complexity has long been a barrier to mainstream AI use. By merging reasoning, creativity, and multimodality into one unified platform, OpenAI hopes to set a new standard.

Open-Weight Models and Accessibility

Another major development is the possibility of releasing open-weight models. Reuters reported that OpenAI is preparing to launch models whose weights are publicly available, allowing developers to run and fine-tune them for specific use cases.

This move would represent a partial shift toward openness, contrasting with the closed nature of most current models. By enabling more customization, OpenAI could strengthen its developer ecosystem and compete with emerging open-source alternatives.

However, experts caution that open weights also introduce risks. With greater accessibility comes the potential for misuse, especially if powerful models fall into the wrong hands. Balancing openness with safety will be a defining challenge for OpenAI’s future.

OpenAI’s roadmap shows a company at the heart of both opportunity and controversy. From building one of the largest AI infrastructures in the world to preparing for high-risk biological capabilities, its path forward raises critical questions about governance, ethics, and global security.

The narrative of OpenAI’s future is not only about technology but also about responsibility. How the company and its partners handle these developments will shape the global trajectory of artificial intelligence. For readers who want to dive deeper into related issues, Olam News offers further coverage on AI, technology, and global policy debates.